"Students Use AI. Teachers Do Not."

Two years later, neither ChatGPT nor generative AI lives up to the hype.

This weekend marked the second anniversary of ChatGPT’s public release. The occasion spurred at least two articles questioning generative AI’s impact relative to its hype.

Bloomberg’s Parmy Olson and Carolyn Silverman wrote that generative AI’s value to the world is still unclear, but it has been a bonanza for the largest tech firms:

ChatGPT’s $8 Trillion Birthday Gift to Big Tech by Parmy Olson and Carolyn Silverman, Bloomberg, November 29, 2024.

Megan Morrone of Axios also questioned AI’s impact after two years: “Outside a handful of specific fields, it's hard to make the case that it has transformed the world the way its promoters promise.”

How ChatGPT changed the future by Megan Morrone, Axios, November 30, 2024.

Something Morrone wrote sums up the state of AI usage in K-12.

“While students are regularly using genAI, teachers are not. Education Week recently found that educators' use of artificial intelligence tools in the classroom has barely changed in the last year.” - Megan Morrone, Axios, November 30, 2024.

Is it true that students use generative AI and teachers do not? If so, what can we make of those facts?

Students Use AI

Morrone linked to an Axios article citing a Common Sense Media report to support her claim that “Students are regularly using AI.” In January 2024, Common Sense Media partnered with OpenAI, making their claims suspect (read on for more).

However, there is evidence that K-12 students use generative “AI.” In August, The Washington Post shared its finding that more than 1 in 6 chatbot conversations are students seeking help with homework.

Marc Watkins attended OpenAI’s Education forum, where he learned (bold formatting added by me):

“OpenAI’s Education Forum was eye-opening for a number of reasons, but the one that stood out the most was Leah Belsky acknowledging what many of us in education had known for nearly two years—the majority of the active weekly users of ChatGPT are students. OpenAI has internal analytics that track upticks in usage during the fall and then drops off in the spring. Later that evening, OpenAI’s new CFO, Sarah Friar, further drove the point home with an anecdote about usage in the Philippines jumping nearly 90% at the start of the school year.” - Marc Watkins, October 14, 2024.

Side note: If most of ChatGPT’s active users are students, how is that a revolutionary, money-making business? But I digress.

This student usage raises the question: Why?

Arguably, the best use case for synthetic text extruding machines is writing the author neither wants to do nor cares about. Does that sound like a middle or high school student?

So much of K-12 student writing is “at gunpoint.” Of course, there is great pedagogical value in students writing. Teachers need to balance writing’s pedagogical value with student choice and autonomy. I do not have answers to this dilemma, but I shared some ideas in this post.

Pedagogy, Thinking, And The First Draft Or What Teachers Should Consider About AI And Writing

“When it comes to writing, what matters is the writing.” - Author John Warner, February 1, 2024.

Before getting upset with students about using AI, please consider this quote from 27-year-old YouTuber Eddy Burback, one I referenced in my post about talking to students about AI.

“…When I was in school, I was stressed and overwhelmed, and I hated the amount of time school took up of my day. So when I had the opportunity occasionally to take a shortcut, it felt rebellious. It felt like I was claiming back a part of my life that I couldn't control.” - Eddy Burback.

Teachers Do Not Use AI

In October, I asked teachers to wear their Critical Thinking 🧢 in the face of AI hype. I asked them to push back and ask questions as employees at the US National Archives did.

National Archives Employees Wore Their Critical Thinking 🧢 - Teachers Can Too.

404 Media is one of the best sources for following “AI” and technology. They do outstanding work. For example, 404 recently documented that National Archives and Records Administration (NARA) employees witnessed “AI-mazing Tech-venture” in June 2024…

I did not need to ask teachers to be critical thinkers. They already are, as evidenced by the Education Week survey Morrone cited, which shows that most teachers do not use generative AI tools. Dan Meyer wrote a great breakdown of the survey showing that teachers’ generative AI use has not budged for a year.

The lack of teacher AI use raises a different question: How does teachers’ not using generative AI demonstrate critical thinking?

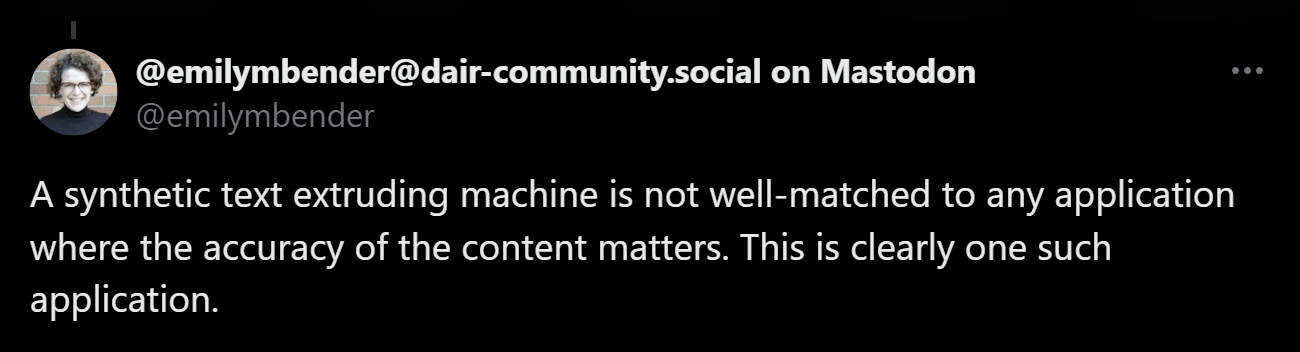

University of Washington Computational Linguist Dr. Emily M. Bender said about large language models:

“A synthetic text extruding machine is not well-matched to any application where the accuracy of the content matters.” - Dr. Emily M. Bender, April 18, 2024.

Do you know any teachers whose tasks depend on accuracy? Do your tasks depend on accuracy?

Dr. Bender wrote about Common Sense Media’s ChatGPT Foundations for K–12 Educators course:

“ChatGPT won't improve your teaching, won't save you time (anymore than not doing your job would save you time), and doesn't represent a key skill set that your students must have, lest they be left behind.

K-12 educators can and should resist the sales pitch.” - Dr. Emily M. Bender, November 22, 2024.

Noam Chomsky said of ChatGPT, “I don’t think it has anything to do with education, except undermining it.”

Is it possible teachers’ generative AI usage is low because they heed the warnings of Bender, Chomsky, and other experts such as Dr. Timnit Gebru?

Continuing the Conversation

What do you think? How are you contending with students using AI? What is factoring into your decision to use, or more likely, not use AI? Comment below or connect with me on BlueSky: tommullaney.bsky.social.

Does your school or district need the assistance of a tech-forward educator who prioritizes critical thinking? I would love to work with you. Reach out on BlueSky, email mistermullaney@gmail.com, or check out my professional development offerings.

Blog Post Image: The blog post image is by Taylor Flowe on Unsplash.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post using the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.