John Mulaney's AI In Education Joke Should Give Teachers Pause

A comedian gets to the heart of the matter.

The In-Service Day

Imagine a whole-school or whole-district in-service day. Maybe Election Day. Hundreds of teachers listen to an AI-in-Education company present “30 AI Apps in 30 Minutes” to a crowded auditorium. Or an EDU celeb delivers an emotional keynote imploring teachers to transform schools to meet the needs of students in this AI era.

Even though OpenAI’s marketing team could not be happier with the messaging the audience heard, the organization did not contribute a dime to pay for the event.

Then, the superintendent tells the audience they have a special surprise. The teachers will now hear from comedian John Mulaney.[1]

If you are unfamiliar with Mulaney’s stand-up act, please watch this five-minute clip.

Mulaney saw the AI presentation. He grabs the microphone and says he has a young son. Then he thanks the assembled teachers,

“…For the world you’re creating for my son … where he will never talk to an actual human again. Instead, a little cartoon Einstein will pop up and give him a sort of good answer and probably refer him to another chatbot.”

Imagine hearing a joke like that after a session about the exciting potential of “AI” for classroom instruction. What would it conjure for you?

Mulaney told this joke to techies at the Salesforce Dreamforce conference last week.

Why Mulaney’s Joke Is So Spot On

I like to consider the perspectives of those outside K-12 when thinking about education. Outsiders are usually not pedagogical experts, but their fresh perspectives have value. My last post quoted Taylor Swift, even though I mostly quote academics and researchers in this space.

I also quoted artists’ takes on generative “AI.”

Generative “AI” can be a dreary subject,[2] so I have also quoted comedian Anesti Danielis and funny YouTuber Eddy Burback in past posts. And now John Mulaney.

Why does Mulaney’s joke ring so true? Why would Mulaney reference his child and education in a joke about “AI”? Why should this joke give teachers pause?

The joke highlights two widely suggested “AI” in education use cases.

“He will never talk to an actual human again.”

As Dan Meyer said, “The AI tutor space is incredibly crowded right now…” We often hear that AI will make a great tutor even if we’re also told that we must verify every claim Large Language Models generate. I don’t want a tutor whose every claim I have to confirm or who won’t say who won the 2020 US presidential election, but “never talk to an actual human again” is hyperbole.

Rarely do “AI” enthusiasts claim students do not need human teachers. But there is a kernel of truth here. How often do we hear “human in the loop” in “AI” education conversations? If someone is “in the loop,” aren’t they just a side contributor, not the driving force?

How comfortable are we with chatbots being the panacea for what ails K-12 education? Especially when, as Meyer explained, students aren’t interested in learning from them.

“A little cartoon Einstein will pop up and give him a sort of good answer.”

This speaks to a suggested “AI” education use case and an inherent flaw with Large Language Models (LLMs).

First the use case: The idea that LLMs can mimic historical figures. I wrote a deep dive into the pedagogical implications.

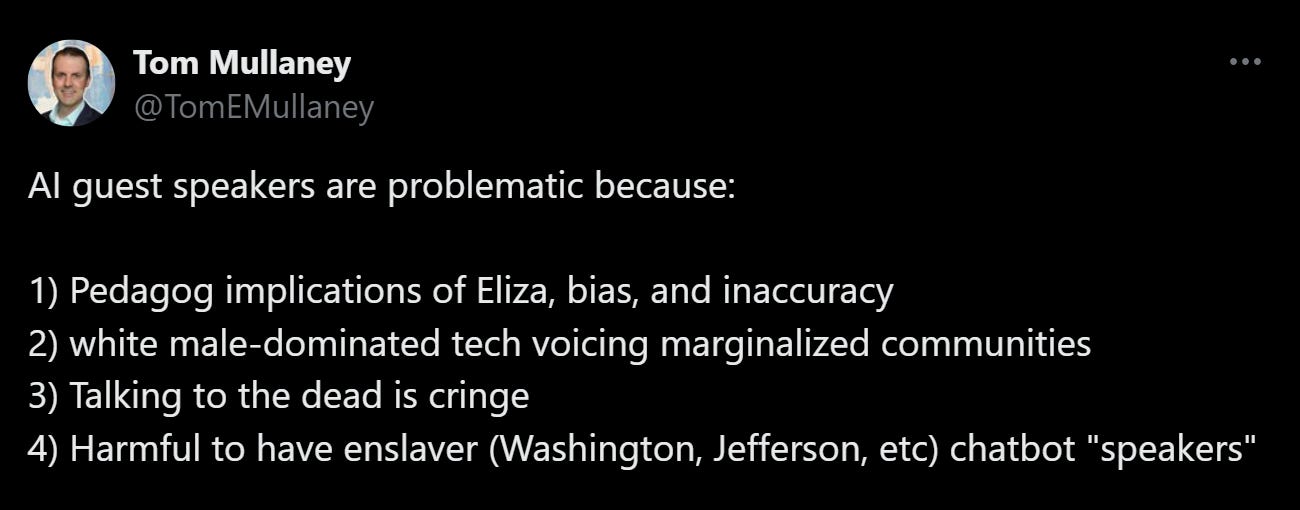

I also Tweeted a condensed list of reasons this strategy is problematic.

The “sort of good answer” part of the joke strikes at an inherent flaw of LLMs: inaccuracy, unfortunately known as “hallucinations.”

As Computational Linguist Dr. Emily M. Bender of the University of Washington said, “A synthetic text extruding machine is not well-matched to any application where the accuracy of the content matters.” Accuracy doesn’t matter in K-12 education, right?

Dr. Bender refers to an LLM as a “synthetic text extruding machine.”[3] This complements what Mulaney said with “sort of good answer.” When students use chatbots as a source of information, they get possibly accurate information, maybe? Is it information if no one wrote it and there is no intention behind it? Thinking about this as a former Social Studies teacher, I want to scream: Use a primary source document or do some actual research!

Self-checkout Counters

Mulaney said one more thing to the techies that is reminiscent of a vision of K-12 education in which children regularly interact with chatbots.

“You look like a group who looked at the self-checkout counters at CVS and thought, ‘This is the future.’”

Self-checkout counters do not fundamentally harm the customer checkout experience.

Can we confidently say the same about chatbots and the student education experience?

Continuing The Conversation

What do you think? How are you using critical thinking to address “AI” this school year? Comment below or Tweet me at @TomEMullaney.

Does your district’s in-service day need a tech-forward speaker who critically examines AI and pedagogy? Check out my professional development offerings, reach out on Twitter, or email mistermullaney@gmail.com.

Post Image: The post image, from Wikimedia Commons, shows Mulaney performing at a Joining Forces event at Joint Base Andrews in 2016. I edited the image with Procreate.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post from the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.

There are no generative AI-generated images in this post.

[1] No relation to the author. Notice the woefully insufficient number of Ls in his last name.

[2] The environmental racism, theft, and worker exploitation inherent to generative “AI” are heavy subjects.

[3] A more accurate description of them, in the author’s opinion.

This reminds me of E. B. White’s line “Humor can be dissected, as a frog can, but the thing dies in the process.” I like what you did here, and I think there is real value in writing and speaking humorously about what Silicon Valley is trying to make us believe about generative AI.

As you say, so much of what we skeptics believe is serious and heavy. Skeptics struggle with the weight of what they must say in response to the hype. Restating that hype in ways that reveal its absurdity is perhaps more effective.

We are being force-fed AI. It’s almost like none of us ever read 1984.