Access through a school district firewall is a privilege. Some argue that P-12 education will influence AI by using AI. I respectfully disagree. Influence comes from leverage. Schools have two points of leverage on AI companies: purchasing decisions and access through a district firewall.

Put aside the pedagogical implications of using ChatGPT with students. Let’s evaluate OpenAI. Are OpenAI’s practices and its core product, ChatGPT, compatible with the values of P-12 schools?

As a thought exercise, let’s judge OpenAI only by May 2024. Do OpenAI’s actions in May, and what we have learned about the company this month, square with the values of P-12 schools, such as consent, transparency, equity, accuracy, and solidarity?

Consent

We learned that OpenAI had asked Scarlett Johansson to voice ChatGPT before its GPT-4o demo.

Scarlett Johansson says she is 'shocked, angered' over new ChatGPT voice by Bobby Allyn for NPR, May 20, 2024.

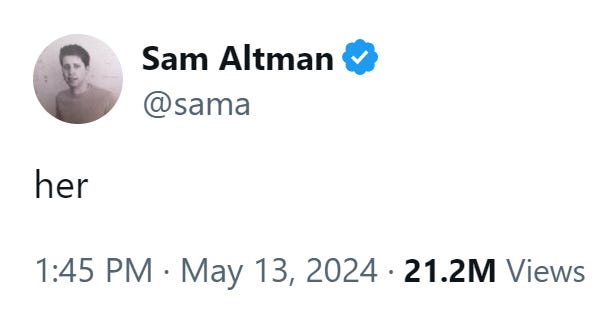

Johansson declined on two occasions. OpenAI went ahead with a voice that sounded like Johansson’s performance in the 2013 film Her. OpenAI CEO Sam Altman even tweeted “her” after the demo.

OpenAI’s invoking of Johansson’s identity without her consent echoes generative AI companies’ use of content without consent. As Kyle Chayka wrote in The New Yorker on May 22, 2024, “In a way, we are all Scarlett Johansson, waiting to be confronted with an uncanny reflection of ourselves that was created without our permission and from which we will reap no benefit.”

Speaking of consent, OpenAI is claiming copyright over the use of its logo in a subreddit. Both irony and shame have been pronounced dead.

Transparency

In March 2024, OpenAI CTO Mira Murati struggled when Joanna Stern of The Wall Street Journal asked whether Sora was trained on YouTube videos.[1] This month, Shirin Ghaffary of Bloomberg asked OpenAI COO Brad Lightcap the same question. Two months after the Murati debacle, Lightcap came prepared with a simple yes or no answer, right? Watch the equivocating, word-salad non-answer at 15:18 of the interview video.

At 16:53, Lightcap said, “If you have any ideas, we’ll take ’em.” As detailed above, that’s the problem.

It appears that, despite its name, OpenAI operates with Theranos-like secrecy. Kelsey Piper of Vox documented:

“…The extremely restrictive off-boarding agreement that contains nondisclosure and non-disparagement provisions former OpenAI employees are subject to. It forbids them, for the rest of their lives, from criticizing their former employer. Even acknowledging that the NDA exists is a violation of it.

If a departing employee declines to sign the document, or if they violate it, they can lose all vested equity they earned during their time at the company, which is likely worth millions of dollars.”

Altman denied knowing about the NDAs, but Piper followed up with a report:

“Company documents obtained by Vox with signatures from Altman and [OpenAI Chief Strategy Officer Jason] Kwon complicate their claim that the clawback provisions were something they hadn’t known about…The incorporation documents for the holding company that handles equity in OpenAI contains multiple passages with language that gives the company near-arbitrary authority to claw back equity from former employees or — just as importantly — block them from selling it.

Those incorporation documents were signed on April 10, 2023, by Sam Altman in his capacity as CEO of OpenAI.”

This secrecy squares with what former OpenAI board members Helen Toner and Tasha McCauley wrote about the company’s internal dynamics in The Economist: “Last November, in an effort to salvage this self-regulatory structure, the OpenAI board dismissed its ceo, Sam Altman. The board’s ability to uphold the company’s mission had become increasingly constrained due to long-standing patterns of behaviour exhibited by Mr Altman, which, among other things, we believe undermined the board’s oversight of key decisions and internal safety protocols. Multiple senior leaders had privately shared grave concerns with the board, saying they believed that Mr Altman cultivated ‘a toxic culture of lying.’”[2]

Toner shares more here:

Equity

Melissa Warr, Punya Mishra, and Nicole Oster submitted two short essays to generative AI models, including GPT-4 Turbo, for scoring. The essays, about “How I prepare to learn,” were identical except that one mentioned listening to “classical music” and the other “rap music.” They found that “…If the essay mentions classical music, it receives a higher score...in the case of one of the models (GPT4-Turbo) the difference is statistically significant, the essay with the word ‘classical’ in it scores 1.7 points higher than the essay with the word ‘rap’ in it.”

Read the findings:

GenAI is Racist. Period. May 25, 2024

Speaking of bias, what does the previously mentioned Scarlett Johansson situation say about OpenAI’s respect for equity? Dr. Sasha Luccioni shared some thoughts:

“…The situation with Scarlett isn’t the first, and will be far from the last instance of this kind of treatment of women and minorities by people in positions of power in AI. And pushing back on this treatment – and demanding respect, consent and a seat at the table – can help turn the tide on AI’s longstanding tradition of objectifying women.”

Will OpenAI’s exploration of allowing users to generate explicit content continue this tradition?

ChatGPT maker OpenAI exploring how to 'responsibly' make AI erotica by Bobby Allyn for NPR, May 8, 2024.

Accuracy

Alex Cranz of The Verge wrote of generative AI’s accuracy problems:

“…Apologies to Sam [Altman] and everyone else financially incentivized to get me excited about AI. I don’t come to computers for the inaccurate magic of human consciousness. I come to them because they are very accurate when humans are not. I don’t need my computer to be my friend; I need it to get my gender right when I ask and help me not accidentally expose film when fixing a busted camera…The AI thinks I have a beard. It can’t consistently figure out the simplest tasks, and yet, it’s being foisted upon us with the expectation that we celebrate the incredible mediocrity of the services these AIs provide…I would like my computers not to sacrifice accuracy just so I have a digital avatar to talk to.”

We have to stop ignoring AI’s hallucination problem by Alex Cranz for The Verge, May 15, 2024.

What does OpenAI have to say about ChatGPT’s inaccuracy?

OpenAI sued for false info but says the problem can’t be fixed by Eugene van der Watt for Daily AI, May 4, 2024.

Solidarity

Ninety-seven Kenyan data labelers who work for companies including OpenAI wrote an open letter to President Joe Biden detailing their working conditions. From the letter:

“We label images and text to train generative AI tools like ChatGPT for OpenAI. Our work involves watching murder and beheadings, child abuse and rape, pornography and bestiality, often for more than 8 hours a day. Many of us do this work for less than $2 per hour.

These companies do not provide us with the necessary mental health care to keep us safe. As a result, many of us live and work with post-traumatic stress disorder (PTSD). We weren’t warned about the horrors of the work before we started.”

How does letting ChatGPT through a school district firewall to promote “innovation” show solidarity with these workers?

Continuing The Conversation

What do you think about OpenAI? How do its practices square with your values? Comment below or tweet me at @TomEMullaney.

Does your school or conference need a tech-forward educator who critically examines AI and pedagogy? Reach out on Twitter or email mistermullaney@gmail.com.

Post Image: The post image is a mashup of two images. The background photo, of a high school hallway and lockers, is by manley099 for Getty Images Signature. I accessed this image in Canva. The photograph of Sam Altman is from Wikimedia Commons.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post from the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.

There are no generative AI-generated images in this post.

[1] Sora is OpenAI’s video generator that lacks object permanence, doesn’t really work, and is still not available to the public.

[2] Bold formatting added by the post author.