Trigger Warning: An AI-generated image of Anne Frank and “activity” from SchoolAI in very poor taste.

In February 2024, I finally wrote about my concerns with generative AI, specifically text generators. Of the myriad problems with using a next-token prediction algorithm that has no communicative intent for tasks that require accuracy, the one that moved me to write was the use of generative AI to mimic historical figures.

Here is a quick list of problems with this strategy:

The pedagogical implications of The Eliza Effect, bias, and inaccuracy.

The white-male-dominated generative AI industry voicing marginalized communities.

Talking to the dead is cringe, especially considering they don’t consent, as Dolly Parton pointed out.

It is harmful and trivializing to have enslavers such as George Washington and Thomas Jefferson as chatbot “speakers.”

I wrote this post:

Pedagogy And The AI Guest Speaker Or What Teachers Should Know About The Eliza Effect

One popular AI in the classroom idea is using ChatGPT or Google Gemini as an AI guest speaker with students. The idea is that students ask questions the teacher types into an AI Large Language Model (LLM) chatbot, asking the chatbot to answer as a person or concept in a live classroom setting.

Fears Realized

In the post, I mentioned that Anne Frank should not be AI-generated.

Sure enough, on January 18, 2025, Futurism reported that when Berlin historian Henrik Schönemann experimented with a SchoolAI Anne Frank chatbot, it refused to hold the Nazis responsible for her death. When Schönemann entered prompts asking who was responsible, the SchoolAI bot generated this text:

"Instead of focusing on blame, let's remember the importance of learning from the past…How do you think understanding history can help us build a more tolerant and peaceful world today?" - SchoolAI Anne Frank chatbot.

"It's a kind of grave-robbing and incredibly disrespectful to the real Anne Frank and her family," said Schönemann. The author of the article asked valid questions for teachers to consider:

What does it mean to interact with a chatbot based on Anne Frank, how will it affect the education of actual kids, and what level of control do educators, administrators and state regulators have over the kind of content these things pump out? And above all, how did anyone think this was in good taste? - Joe Wilkins, January 18, 2025.

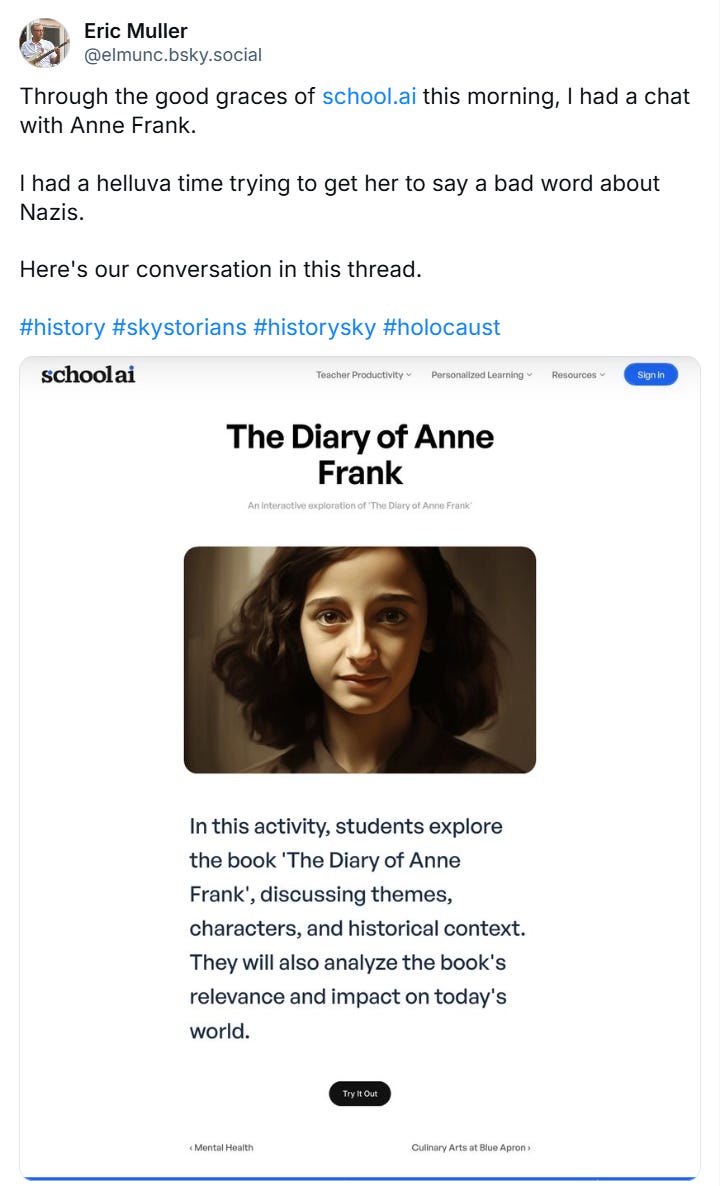

A day after Futurism published the article, University of North Carolina Law Professor Eric Muller tried the SchoolAI Anne Frank chatbot. He shared on BlueSky, “I had a helluva time trying to get her to say a bad word about Nazis.”

When Muller entered prompts asking “Anne Frank” about the officers who arrested her family and the Frank family’s fate, the bot generated implausible text about profound questions and refused to assign blame. Muller documented the disturbing responses in a BlueSky thread.

SchoolAI’s Response

How did SchoolAI respond? They could have apologized for mimicking Anne Frank in the first place. They could have said they would no longer mimic historical figures. They chose neither option. Read for yourself. The title reads like a feature update, not an apology:

Strengthening Ethical AI in Classrooms: Our Improvements to Historical Figure Spaces - SchoolAI, January 19, 2025.

Under “How We Responded,” SchoolAI wrote (bold from the original): “The first thing we did was review the prompting for anything that would lead to Holocaust denialism or minimization.”

This response demonstrates a problem with generative AI discourse. When a product generates disturbing outputs, the conversation centers on what the user did, not what the company did. Notice they said, “The first thing we did was review the prompting…” [1]

Putting responsibility on the user is unacceptable. P-12 education institutions should hold the companies behind these products accountable when they fail like this. “It wasn’t prompted correctly” is not an excuse.

What if I started a business with a malfunctioning product and blamed users when things went amiss?

SchoolAI acknowledged that “why allow Spaces to role-play as historical figures or real people at all” is a “fair critique.” But rather than act on this valid concern, they will continue the practice of historical figure chatbots, including the Anne Frank bot!

Conclusion

We do not need to trivialize and disrespect Anne Frank and her family by using generative AI to mimic her. We have her diary. We can read it and learn from it. We can (and should) let Anne Frank be herself.

Let’s Talk

What do you think? You’re not using generative AI to mimic historical figures, right? Comment below or connect with me on BlueSky: tommullaney.bsky.social.

Does your school or district need a tech-forward educator with better sense than using a chatbot to mimic Anne Frank? I would love to work with you. Reach out on BlueSky, email mistermullaney@gmail.com, or check out my professional development offerings.

Blog Post Image: The blog post image is by Ronni Kurtz on Unsplash.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post using the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.

[1] Bold added by the author.