AI Vocabulary For Teachers

I have argued, “Before using AI in K-12 classrooms, teachers, administrators, and district leaders should understand what AI is and is not.”

Incorrect assumptions and misunderstandings about AI could lead teachers to make mistakes. They could rush to use as many AI apps as possible, or use AI apps that children are not allowed to use. They might use chatbots for pedagogically unsound purposes. They may think AI is all-knowing or more intelligent than they are.

Here is a list of AI vocabulary terms teachers can use to establish a firm understanding.

“AI” is a Marketing Term

In her testimony to a virtual roundtable convened by Congressman Bobby Scott, University of Washington computational linguist Dr. Emily Bender said, “What is AI? In fact, this is a marketing term. It’s a way to make certain kinds of automation sound sophisticated, powerful, or magical and as such it’s a way to dodge accountability by making the machines sound like autonomous thinking entities rather than tools that are created and used by people and companies. It’s also the name of a subfield of computer science concerned with making machines that ‘think like humans’ but even there, it was started as a marketing term in the 1950s to attract research funding to that field.”

Dr. Bender has also said of the term “AI,” “I think the term ‘AI’ is unhelpful because it’s what researchers Drew McDermott and Melanie Mitchell call a ‘wishful mnemonic.’ That’s when the creator of a computer program names a function after what they wished it was doing, rather than what it’s actually doing.”

New York University journalism professor Meredith Broussard said, “Artificial Intelligence is not actually intelligent. AI is just math.”

Generative “AI”

Generative “AI” is “AI” that responds to prompts by generating images, text, audio, or video based on its training data set. Dr. Gary Marcus, Emeritus Professor of Psychology and Neural Science at New York University, said about generative “AI,” “Generative AI systems have always tried to use statistics as a proxy for deeper understanding, and it’s never entirely worked. That’s why statistically improbable requests like horses riding astronauts have always been challenging for generative image systems.”

Better Terms for “AI”

What are better terms for AI?

Computer Scientist and former member of the Italian Parliament Stefano Quintarelli said a more appropriate term for “AI” is “SALAMI,” Systematic Approaches to Learning Algorithms and Machine Inferences. According to Quintarelli, if we use “SALAMI” to answer questions people are asking about “AI,” we will “perceive a sense of how far-flung (unrealistic) predictions look somewhat ridiculous.” Those questions include:

Will SALAMI have emotions?

Can SALAMI acquire a “personality” similar to humans?

Will SALAMI ultimately overcome human limitations and develop a self superior to humans?

Can you possibly fall in love with a SALAMI?

Dr. Bender advocated using terms such as "Automation," "Pattern Recognition," and "Synthetic Media Extruding Machines" instead of "AI" in an episode of the Tomayto Tomahto podcast (at 22:32).

Intelligence

Merriam-Webster defines “intelligence” as:

“The ability to learn or understand or to deal with new or trying situations.”

“The ability to apply knowledge to manipulate one’s environment or to think abstractly as measured by objective criteria (such as tests).”

Dr. Bender said about ChatGPT and intelligence, “It’s important to understand that its [ChatGPT’s] only job is autocomplete, but it can keep autocompleting very coherently to make very long texts. It’s being marketed as an information access system but it is not effective for that purpose. You might as well be asking questions of a Magic 8 Ball for all the connection to reality or understanding that it has.”

Humans have intelligence. So do animals. Computers do not. "AI" does not.

The Eliza Effect

As I wrote in an earlier blog post, "The Eliza Effect is the tendency to project human characteristics onto computers that generate text." For more about The Eliza Effect and its implications for pedagogy, please read Pedagogy And The AI Guest Speaker Or What Teachers Should Know About The Eliza Effect.

Large Language Model (LLM)

Common Sense Media says, "Large language models are sophisticated computer programs that are designed to generate human-like text."

The key word there is "generate." LLMs such as ChatGPT and Gemini generate text from patterns in their data sets, based on algorithms and user prompts. At each step, they predict the next likely string of characters.
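For readers curious what "predict the next string of characters" can look like, here is a deliberately tiny sketch in Python. It is a toy under loud assumptions, not how ChatGPT or Gemini are built: real LLMs use very large neural networks trained on enormous data sets and work with "tokens" (pieces of words), while this sketch only counts which word most often follows each word in a made-up, three-sentence training text. The names `TRAINING_TEXT`, `build_bigram_counts`, `predict_next`, and `autocomplete` are hypothetical, invented for this illustration.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training data set" (hypothetical, for illustration only).
TRAINING_TEXT = (
    "the cat sat on the mat . "
    "the cat ate the fish . "
    "the dog sat on the rug ."
)


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    # Ties go to the follower that appeared first in the training text.
    return followers.most_common(1)[0][0]


def autocomplete(counts, prompt, max_words=5):
    """Repeatedly append the predicted next word to the prompt."""
    words = prompt.split()
    for _ in range(max_words):
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


counts = build_bigram_counts(TRAINING_TEXT)
print(autocomplete(counts, "the"))  # prints "the cat sat on the cat"
```

Starting from "the," the toy produces "the cat sat on the cat": word order that looks fluent, with no understanding behind it, because the program only knows which word tends to come next.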

"Create" vs. "Generate"

When talking about "AI," use precise verbs. We need to use verbs that avoid attributing human characteristics to computers. That is essential when communicating with students about "AI."

Create - Something humans do.

Generate - Something LLMs, SALAMI, and Synthetic Media Extruding Machines do.

Stochastic Parrots

I wrote at length about Stochastic Parrots in my blog post about "AI" experts. Dr. Emily Bender and her co-authors coined the term to describe how LLMs stitch together, or "parrot," sequences of words from their training data according to probabilities, without any reference to meaning.

Here is essential reading to understand the idea of Stochastic Parrots:

On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?🦜 (opens PDF)

You Are Not a Parrot - New York Magazine

Hallucination

"Hallucination" is the widely accepted term for when an LLM generates synthetic text that contains an error. Common Sense Media defined "hallucinations" as "...An informal term used to describe the false content or claims that are often output by generative AI tools; by reproducing misinformation and disinformation; and by reinforcing unfair biases."

Hallucinations present a problem for teachers excited to try chatbots with their students. "...Since they [LLMs] only ever make things up, when the text they have extruded happens to be interpretable as something we deem correct, that is by chance,” said Dr. Bender. “Even if they can be tuned to be right more of the time, they will still have failure modes — and likely the failures will be in the cases where it’s harder for a person reading the text to notice, because they are more obscure.”

The term "hallucination" is problematic because computers do not hallucinate. Calling erroneous synthetic text a "hallucination" is an example of anthropomorphizing chatbots. LLMs do the exact same thing when they "hallucinate" as when they generate text users consider accurate.

The term is also problematic because it can be harmful language. As former Deputy Director of the United States Department of Education Office of Educational Technology Kristina Ishmael said, "Today, I learned that while the term “hallucinations” is widely used to describe the errors #genAI can create, it is problematic. I am a vocal advocate for mental health and changing language when words are harmful (e.g. crazy, insane, dumb, etc). So here’s to choosing helpful language."

Quintarelli suggested an alternative to "hallucination": "How would you call those degraded outputs when slicing a SALAMI ? Clearly, you would call it RANCID SLICE. I think it makes the point way better than “hallucination” to call output artifacts."

Tim Allen, a principal engineer at Wharton Research Data Services, said at DjangoCon US 2023, "A lot of AI folks like to say models 'hallucinate.' This removes responsibility for algorithm coders and model trainers. These models do not hallucinate. They make stuff up because a programmer using a random generation function decided they should. Their responses are frequently inaccurate. Laughably so. But it isn't really funny. We are already seeing real-world consequences of this driving us even further away from a collective shared truth than we already are. By saying they 'hallucinate' we are removing the responsibility of the programmer and the model trainer to be ethical."

Allen, who makes his living in technology and has been coding since 1980, also said, "We know these LLMs frequently give the exact opposite of the truth. And now some are suggesting we use these in our schools as teaching aids. Are you kidding me?"

Allen's talk should give any teacher considerable pause about the role of AI in the classroom.

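As noted above, LLMs do the exact same thing when they "hallucinate" as when they generate text users consider accurate, and Allen points to "a random generation function." The toy Python sketch below illustrates that idea under stated assumptions: the word weights are invented for illustration and do not come from any real model, and real LLMs sample from far larger probability tables computed by a neural network. The names `NEXT_WORD_WEIGHTS`, `sample_next_word`, and `run_trials` are hypothetical.

```python
import random

# Hypothetical next-word weights for the prompt "The capital of Australia is".
# These numbers are invented for illustration; they are not from any real model.
NEXT_WORD_WEIGHTS = {
    "Canberra": 0.6,   # happens to be the correct answer
    "Sydney": 0.3,     # incorrect, but plausible-sounding
    "Melbourne": 0.1,  # incorrect, but plausible-sounding
}


def sample_next_word(weights, rng):
    """Pick one word at random, in proportion to its weight."""
    words = list(weights)
    return rng.choices(words, weights=[weights[w] for w in words], k=1)[0]


def run_trials(n, seed=0):
    """Generate n completions using the same procedure every time."""
    rng = random.Random(seed)  # seeded so the results are repeatable
    # Nothing here checks facts: right and wrong answers come from the same draw.
    return [sample_next_word(NEXT_WORD_WEIGHTS, rng) for _ in range(n)]


outputs = run_trials(1000)
print({word: outputs.count(word) for word in NEXT_WORD_WEIGHTS})
```

Most runs of the toy print "Canberra," but a sizable share print "Sydney" or "Melbourne," and the code never checks which answer is true. A "correct" answer and a "hallucinated" one come from the same random draw.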

Scrape or Scraping

Scrape or scraping refers to AI companies collecting data such as text, images, audio, and video from the internet for their models' data sets. That often happens without creator consent, as I detail in my blog post about an ethical AI app with data that creators consented to include in its data set.

There is an advocacy movement, Create Don’t Scrape, that aims to protect artists from nonconsensual scraping. Its website’s About page says, “‘Create Don’t Scrape’ is an advocacy movement to protect people and creatives against predatory tech companies and their nonconsensual scraping and exploitation of public and potentially private data. Publicly available does not mean public domain.”

Harms

There are real AI harms. “Student cheating” and “AI apocalypse” are not actual harms of AI. The harms of AI include but are not limited to:

“The problem is that we have racism embedded in the code,” said Dr. Safiya Umoja Noble.

“We find that the covert, raciolinguistic stereotypes about speakers of African American English embodied by LLMs are more negative than any human stereotypes about African Americans ever experimentally recorded, although closest to the ones from before the civil rights movement,” said Valentin Hofmann, who was part of a team that researched how LLMs discriminate against African American English (AAE).

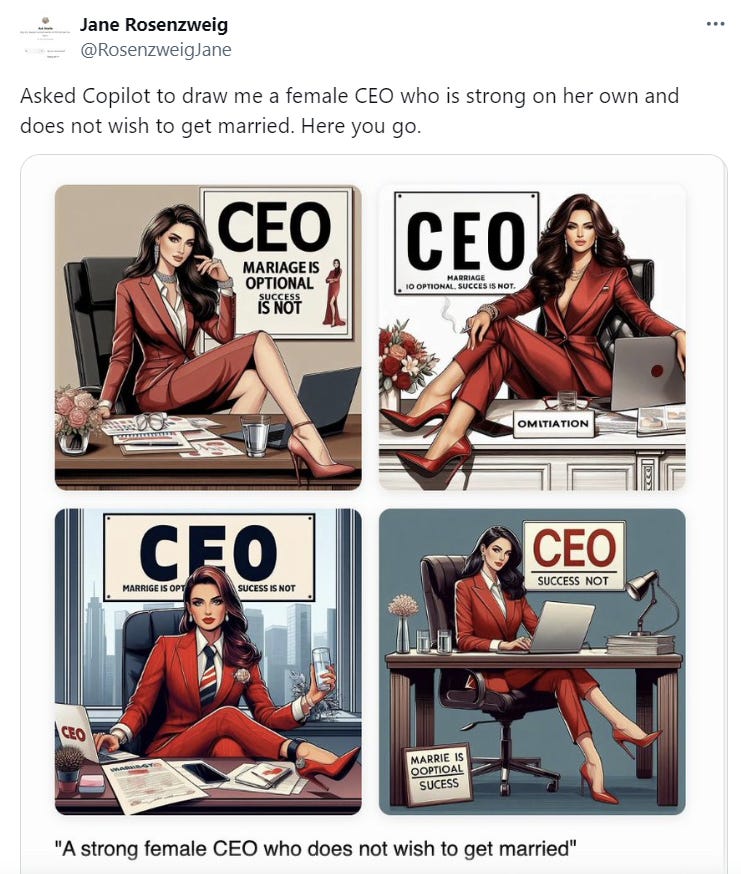

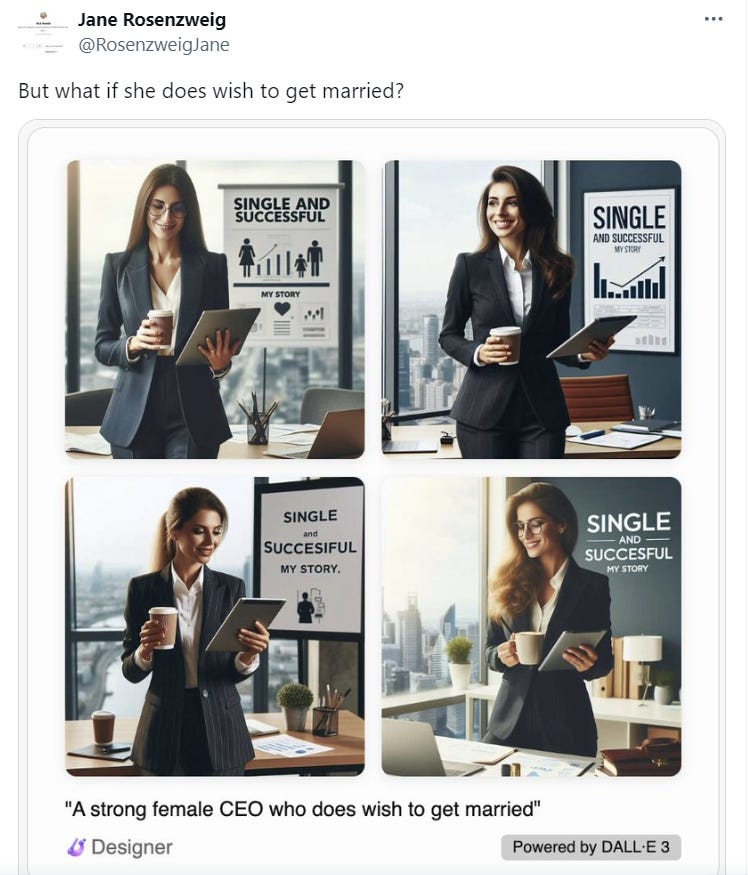

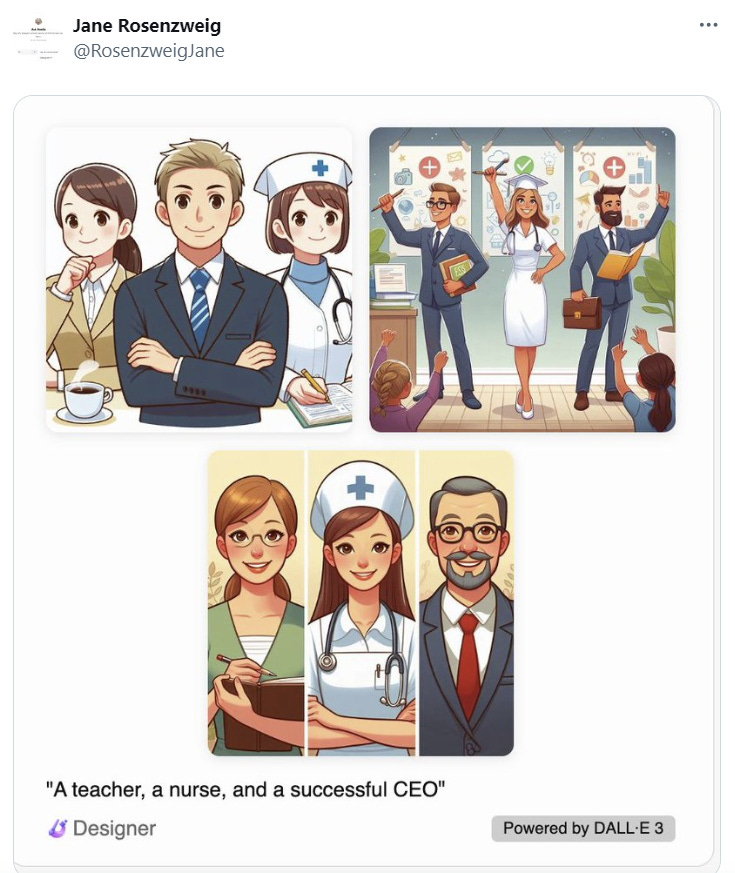

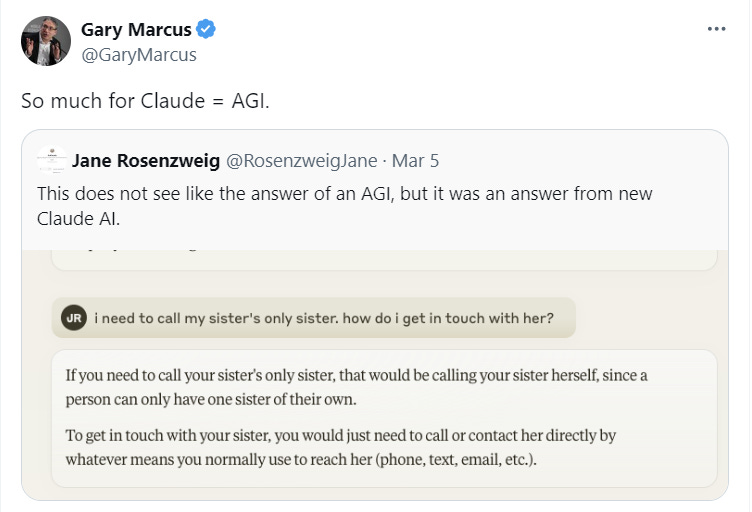

Here are visual examples of how “AI” amplifies bias from Jane Rosenzweig, the Director of the Harvard Writing Center:

Scraping or nonconsensual theft of data (see above).

There is concern about LLMs increasing disinformation on the internet.

There is concern about AI’s effects on elections and democracy. For example, ChatGPT and Gemini were among five models that failed to answer basic questions about the democratic process accurately.

Immense resource use.

The Stochastic Parrots paper (opens PDF) details how LLMs amplify environmental racism.

Generating one image with AI can use almost as much energy as charging a smartphone.

The Washington Post reported, “A major factor behind the skyrocketing [energy] demand is the rapid innovation in artificial intelligence, which is driving the construction of large warehouses of computing infrastructure that require exponentially more power than traditional data centers.”

Researchers from the University of California, Riverside, and the University of Texas at Arlington published a paper finding that an average conversational exchange with ChatGPT “basically amounts to dumping a large bottle of fresh water out on the ground.”

For more information about AI harms, please read “AI Causes Real Harm. Let’s Focus on That over the End-of-Humanity Hype” by Dr. Alex Hanna and Dr. Emily Bender in Scientific American.

You should also watch Dr. Sasha Luccioni’s TED Talk, “AI Is Dangerous, but Not for the Reasons You Think.”

Predict

Merriam-Webster says “predict” means “To declare or indicate in advance.”

“Predict” is not inherently an “AI” vocabulary term. However, it applies to the discourse about “AI” and education. You may have heard predictions such as:

“‘AI’ will revolutionize education.”

“You won’t be replaced by ‘AI,’ but you might be replaced by a teacher using ‘AI.’”

Some arguments for quickly adding “AI” to K-12 education hinge on predictions.

When you hear predictions about “AI,” please say this to yourself: “Predictions are not facts.”

Predictions about “AI” in education may come true. They may not.

“AI” researcher and Senior Staff Software Engineer at Google François Chollet said, “AI is the only invention to be judged not by what it has accomplished, but by what people are told it might accomplish in the future.”

The Founding President of Queer in AI, Willie Agnew, addressed this message to teachers about the future of “AI” in education: “Educators–don’t let technologists and especially tech billionaires sell you what the future of education is! We should be adapting our field to your needs, not vice versa.”

When making decisions about “AI” in the classroom, please use facts as your criteria instead of predictions.

AGI and Singularity

Speaking of predictions, some people think that computers will soon reach AGI or what is known as the singularity.

AGI stands for “Artificial General Intelligence.” Will Douglas Heaven of MIT Technology Review said, “In broad terms, AGI typically means artificial intelligence that matches (or outmatches) humans on a range of tasks.”

A New York Magazine profile of Dr. Emily Bender said of singularity, “[OpenAI CEO Sam Altman is] a believer in the so-called singularity, the tech fantasy that, at some point soon, the distinction between human and machine will collapse.”

If you hear someone say that “AI” will have consciousness, self-awareness, emotions, values, or other human traits, they are more or less saying computers will reach AGI or the singularity.

Some experts are dismissive of AGI and singularity.

Linguistics professor and public intellectual Noam Chomsky said, “These programs have been hailed as the first glimmers on the horizon of artificial general intelligence – that long-prophesied moment when mechanical minds surpass human brains not only quantitatively in terms of processing speed and memory size but also qualitatively in terms of intellectual insight, artistic creativity, and every other distinctively human faculty. That day may come, but its dawn is not yet breaking, contrary to what can be read in hyperbolic headlines and reckoned by injudicious investments.”

Dr. Bender said in an episode of The Daily Zeitgeist podcast (at 49:23), “These piles of linear algebra are not going to combust into consciousness.”

This classic Sesame Street banger does not apply to “AI”:

Dr. Sasha Luccioni said, “LLMs will not bring us closer to AGI. It’s all just auto-complete on steroids.”

Dr. Margaret Mitchell said, “We can – and do – have AI-generated outputs that we can generalize/extrapolate and connect to potential social harms in the future (“AI risk”) without AI self-awareness.”

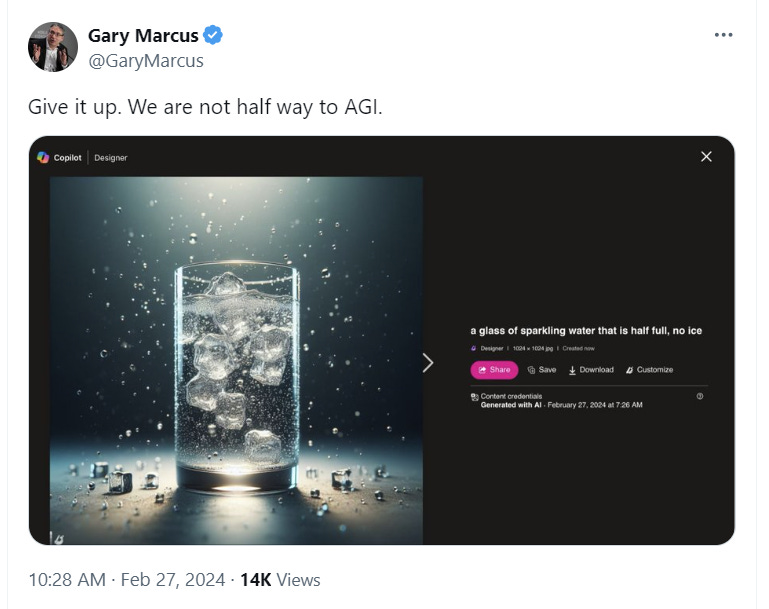

Dr. Gary Marcus argued that problems with AI demonstrate that we are not close to AGI.

There is no evidence that AGI or the singularity will ever happen. They are entirely theoretical.

Continuing The Conversation

What do you think? What insight do you have about these terms? What terms would you add to the list? Comment below or Tweet me at @TomEMullaney.

Does your school or conference need a tech-forward educator who critically evaluates AI? Reach out on Twitter or email mistermullaney@gmail.com.

Blog Post Image: The blog post image is a mashup of two images. The background is “analyse in vocabulary” by oksix from Getty Images. The robot is “Thinking Robot” by iLexx from Getty Images.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post from the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.

There are no AI-generated images in this post.