An educator recently shared with me that their district has an AI policy. It falls under their academic integrity policy.

Does updating academic integrity policies adequately address AI?

Is this the right approach? Is this sufficient?

Let us explore the concerns school districts must address with their AI policy.

K-12 School District AI Policy Concerns

The concerns school districts must address in their AI policies include:

Inherent Harms of AI

Inherent harms of generative “AI” include but are not limited to:

Theft and plagiarism

We all view the world through our own lens. It makes sense for teachers and administrators to see generative “AI” and consider student cheating. However, we must understand that compared to the inherent harms of generative “AI,” “student cheating” is somewhat trivial.

School district AI policies must address these harms and demonstrate solidarity with those harmed.

Please watch my Week of AI presentation to learn more about the inherent harms of generative AI.

Protecting Students and Teachers

A school district AI policy must detail how students and teachers are protected.

For example, students need protection from false accusations of using “AI” and from having their work subjected to AI detectors.[1]

Teachers need protection from being forced to use generative “AI” they deem pedagogically inappropriate. They also need protection from the deskilling inherent in claiming that generative “AI” can provide accurate information, create lesson plans, teach, assess, and give students feedback.

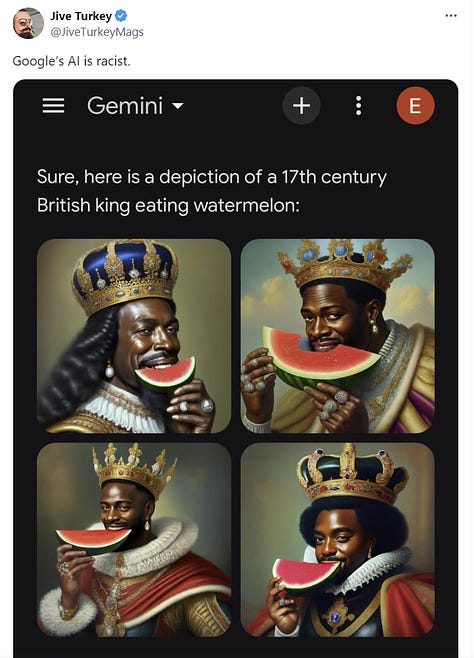

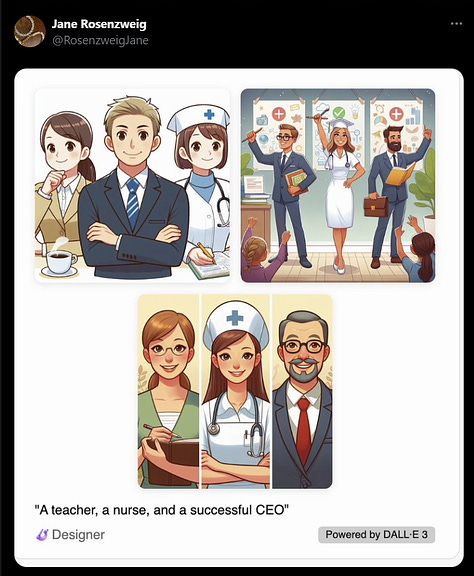

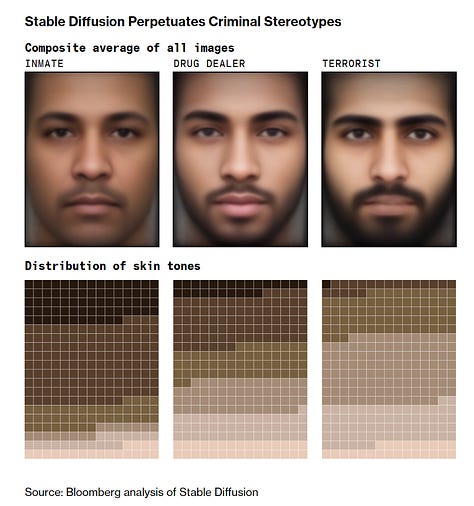

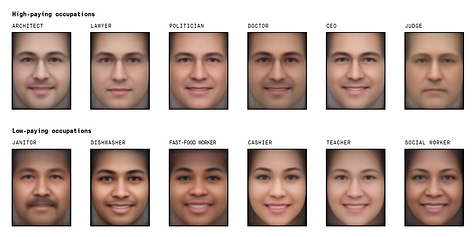

How are students and teachers protected if they object to using tools that amplify the trauma of racism and misogyny?

Should students and teachers be required to use tools that produce this? Trigger warning: racist and misogynistic images.

Pedagogical Practices

Excitement about generative AI in education has generated suggestions to use it for student writing, student creativity, and as a guest speaker, among other pedagogical applications.

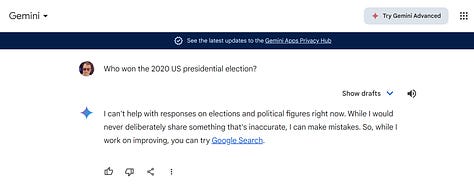

What if the limitations of Large Language Models (LLMs) and image generators are incompatible with suggested pedagogical uses?

Districts need to provide clear guidance to teachers around AI and pedagogy.

Additionally, school districts can and should provide teachers with guidance on assessment practices that encourage student creativity instead of AI use.

Resource Use

LLMs and image generators use immense amounts of energy and water. An average conversational exchange with ChatGPT “basically amounts to dumping a large bottle of fresh water out on the ground.” Generating one image using AI uses almost as much energy as charging a smartphone.

The problem is so dire that Google’s carbon emissions have increased by 48% since 2019 and Microsoft’s carbon emissions have increased by 29% since 2020.

School districts should ask students and teachers to view AI use through this lens.

For example, students and teachers can apply the AI Resource Test. The test questions are:

Are the prompts and generated text worth a bottle of water?

Is the generated image worth enough energy to charge a smartphone?

Access Through the District Firewall

I have argued that access through a school district’s firewall is a privilege. Unfortunately, some school districts have been too restrictive with their firewalls, blocking suicide prevention, sex education, LGBTQ+, and other sites students should have access to.

However, allowing AI websites through school district firewalls for the sake of innovation serves neither students nor teachers.

AI policy must detail how allowing AI websites through a firewall aligns with district values. For example, a school district must evaluate OpenAI’s practices before allowing the ChatGPT website through its firewall.

Academic Integrity

Looking at AI policy through the lens of academic integrity puts the responsibility for the inherent harms of generative AI on individual users. It places the most agency and responsibility on the backs of children.

Why is holding *children* to account the priority?

AI policy can address academic integrity. However, if a school district’s AI policy emphasizes academic integrity, protecting tech companies takes precedence over protecting children.

Have honest conversations with students about using AI. For example, what University of Maryland Information Studies Professor Dr. Katie Shilton said at 1:06 of this video is a good approach:

“Generative AI tools are trained on text and images that were created through significant human effort and creativity. After that training, workers, many of them poorly paid, are contracted to flag obscene and hateful content to make these systems safe for work and for classrooms. Class activities, in contrast, prioritize students doing their own work.[2] Benefiting from the unpaid and poorly paid labor of many other people to complete class assignments is ethically fraught. If you're using these tools to make your life easier, it's worth thinking about whose lives they made harder.” - Dr. Katie Shilton [3]

Honest conversations, not punishment, should lead a school district’s approach to generative AI with students.

Continuing the Conversation

Does your school district need help crafting an AI policy? I would love to work with you. Reach out on Twitter, email mistermullaney@gmail.com, or check out my professional development offerings.

Blog Post Image: The blog post image is by Walls.io on Unsplash.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post using the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.

Critical Inkling is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

1. AI detectors are snake oil. They do not work.

2. Bold formatting was added by Tom.

3. I used this video and quote in my Humans Created This post.