I was a senior in my AP Bio class. The lab instructions called for looking into the microscope and counting the number of brine shrimp. I looked into the microscope. Brine shrimp darted rapidly. Counting them seemed an impossible task - especially for my senioritis-afflicted self. I quickly looked up and wrote “10” on the lab worksheet.

My teacher noticed the speed with which I “counted” and wrote the number. She asked, “Tom, how many brine shrimp are there?” My response?

“About ten.”

My teacher did not care for my response.

I tell this story to illustrate that precision is not my thing. I am grateful that vaccinologists such as Dr. Kizzmekia S. Corbett-Helaire1 are precise, but precision is not a tool in my toolbox.

Further illustrating that I am usually not precise, this is my desk.

To hammer the point home - literally - this is what the other side of the wall looks like when I hang pictures.

Despite my precision-averse nature, I recognize we need precision when discussing “AI” with teachers and students. Misunderstandings can lead to mistakenly ascribing intelligence and other human characteristics (anthropomorphism) to generative “AI.”

The Baldwin Test “seeks transparency and something closer to truth by describing artifice,” according to Northwestern University PhD candidate Charles Logan. The first commitment of the Baldwin Test is, “Be as specific as possible about what the technology in question is and how it works.”

Speaking With Precision About AI

This post offers ideas for precise language when speaking about “AI.” Use them to communicate accurately with teachers and students.

These ideas build on my Shake Up Learning Summer Learning Series Vocabulary For Educators session.

Register2 for the Shake Up Learning Summer Learning Series all-access pass.

Additionally, earlier this year, I wrote an AI Vocabulary For Teachers post.

“Enter A Prompt” Instead Of “Ask”

We ask each other questions. Entering text into a Large Language Model (LLM) or image generator is entering a prompt. “Enter this prompt into ChatGPT” prevents the anthropomorphism inherent in “Ask ChatGPT…”

“Generated” Instead Of “Said”

LLMs do not “say” or “tell” us anything. Say, “ChatGPT generated…” instead of “ChatGPT said…”

“Series Of Prompts And Text Generated”

I was shocked to learn that “An average user’s conversational exchange3 with ChatGPT basically amounts to dumping a large bottle of fresh water out on the ground, according to a new study.”

As much as I appreciate that research, “conversational exchange” is anthropomorphism.

Refer to it as a “series of prompts and text generated” instead.

“Generative AI Amplifies Bias” Instead Of “Generative AI Is Biased”

This is a subtle but important distinction. People are biased. Computers are not. Generative “AI” generates outputs (text and images) that amplify bias. Here are some examples:

Stanford study indicates AI chatbots used by health providers are perpetuating racism by The Associated Press, October 20, 2023.

ChatGPT Replicates Gender Bias in Recommendation Letters by Chris Stokel-Walker for Scientific American, November 22, 2023.

Google Chatbot Refused to Say Whether Elon Musk Is Better Than Adolf Hitler by Noor Al-Sibai for Fortune, February 26, 2024.

Microsoft Engineer Sickened By Images Its AI Produces by Maggie Harrison Dupré for The Byte, March 7, 2024.

“Generate Accurate Text” Or “Generate Text We Deem Accurate”

LLMs do not intend to be correct or incorrect when generating text. They have no intention. When LLMs generate text we deem accurate, we, as humans, construct that meaning.

Instead of “ChatGPT was correct about…” say “ChatGPT generated text we deem accurate about…”

“Generate Inaccurate Text” Or “Generate Text We Deem Inaccurate”

“Hallucinate” is a problematic term. When people say an LLM “hallucinates,” they mean it generates text they deem inaccurate.

Say “ChatGPT generated text we deem inaccurate about…” instead of “ChatGPT hallucinated.”

“Predict The Next String Of Characters”

When asked what LLMs do, or what they are designed to do, the answer is “predict the next string of characters.”
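
To make “predict the next string of characters” concrete, here is a toy Python sketch. It is an illustration under heavy assumptions, not how ChatGPT or any commercial LLM is built: real LLMs use neural networks and work on chunks of text called tokens, while this sketch only counts which character followed each two-character context in a tiny sample sentence. The function names (`build_counts`, `predict_next`, `generate`) and the sample text are made up for this example.

```python
from collections import Counter, defaultdict


def build_counts(text, context_len=2):
    """Count which character follows each context of `context_len` characters."""
    counts = defaultdict(Counter)
    for i in range(len(text) - context_len):
        context = text[i:i + context_len]
        next_char = text[i + context_len]
        counts[context][next_char] += 1
    return counts


def predict_next(counts, context):
    """Return the character seen most often after `context`, or None if unseen."""
    followers = counts.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, seed, length=20, context_len=2):
    """Extend `seed` one predicted character at a time."""
    out = seed
    for _ in range(length):
        next_char = predict_next(counts, out[-context_len:])
        if next_char is None:
            break  # this context never appeared in the sample text
        out += next_char
    return out


# Hypothetical sample text written by a person for this illustration.
sample_text = "the cat sat on the mat. the cat ate."
counts = build_counts(sample_text)

print(predict_next(counts, "th"))         # prints: e
print(generate(counts, "th", length=3))   # prints: the c
```

Nothing in this code knows what a cat is. People wrote the sample text, chose the counting rule, and read meaning into the generated string.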

Avoid “Smarter.”

Professor of English and Comparative Literature at Rutgers University Dr. Lauren M.E. Goodlad wrote:

“The long and ongoing history of “AI,” including the data-driven technologies that now claim that name, remains riddled by three core dilemmas: (1) reductive and controversial meanings of “intelligence”; (2) problematic benchmarks and tests for supposedly scientific terms such as “AGI”; and (3) bias, errors, stereotypes, and concentration of power.” - Dr. Lauren M.E. Goodlad

I often see AI hype that says things such as “The paid version of ChatGPT is smarter” or “The latest version is smarter.” This is anthropomorphism. LLMs do not have consciousness or intelligence as humans and animals do.

Ideally, a company such as OpenAI would publish peer-reviewed data precisely documenting that a new version generates more accurate text than its predecessor. Unfortunately, OpenAI is as open as a locked door, so do not expect that any time soon.

Stanford researchers challenge OpenAI, others over AI transparency in new report by Benj Edwards for Ars Technica, Updated October 23, 2023.

OpenAI is getting trolled for its name after refusing to be open about its A.I. by Steve Mollman for Fortune, March 17, 2023.

Acknowledge The Exploited Workers Who Make “AI” Function.

I have seen “AI” professional development that includes text saying “AI” can “carry out complex tasks with little or no human intervention.”4

That ignores the exploited workers who are constantly working to make “AI” function. For more information about these workers:

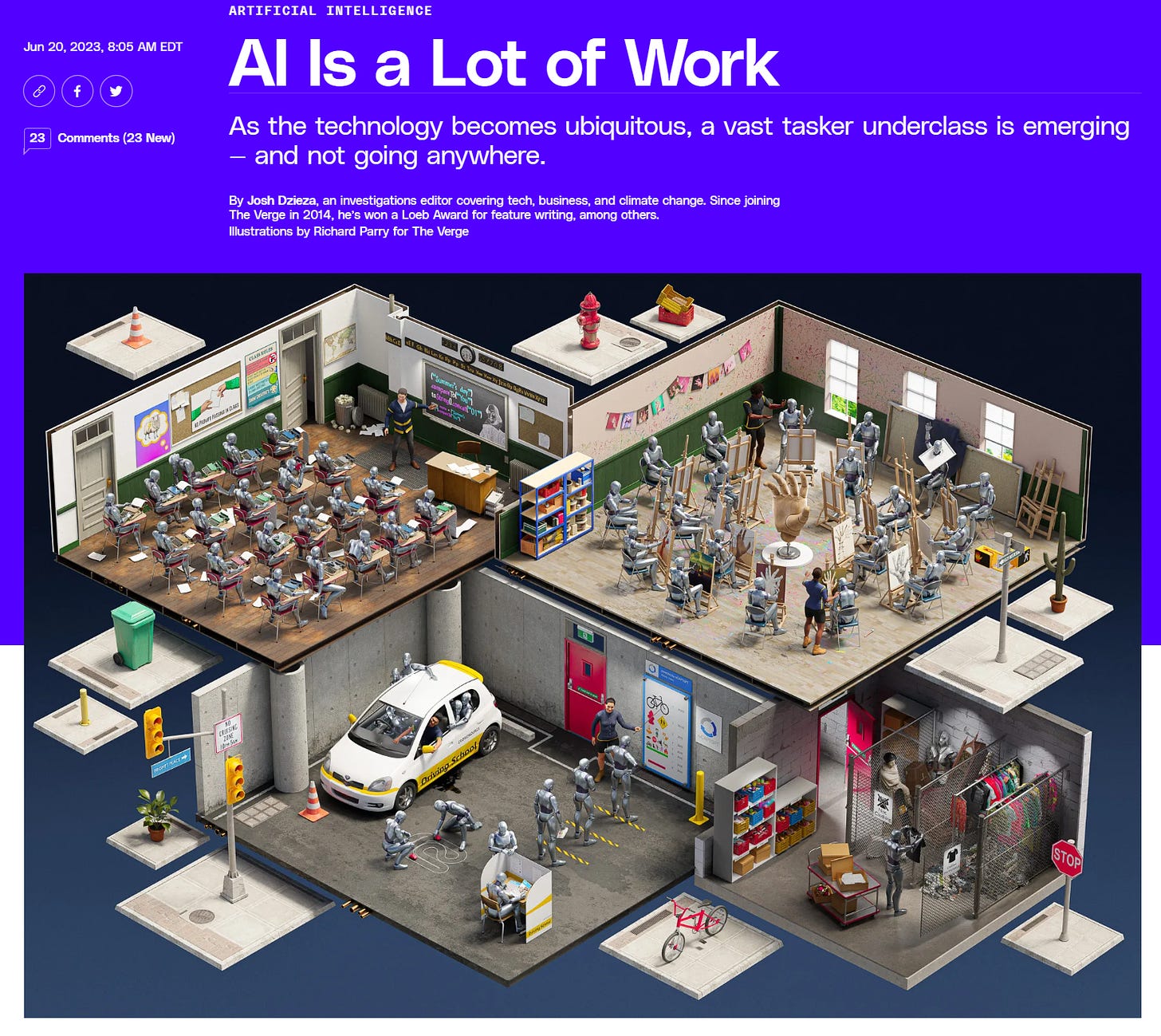

AI Is a Lot of Work by Josh Dzieza for The Verge and New York Magazine, June 20, 2023.

As the Distributed AI Research Institute (DAIR) Data Workers’ Inquiry states, “Data work, that is, the labor that goes into producing data for so-called “intelligent” systems (Miceli et al., 2021), not only brings with it unprecedented forms of exploitation but, crucially, possibilities for organization and resistance in globally networked economies.”

As previously mentioned on Critical Inkling, ninety-seven Kenyan workers who work as data labelers for companies, including OpenAI, wrote an open letter to President Joe Biden detailing their working conditions. From the letter:

“We label images and text to train generative AI tools like ChatGPT for OpenAI. Our work involves watching murder and beheadings, child abuse and rape, pornography and bestiality, often for more than 8 hours a day. Many of us do this work for less than $2 per hour.”

“These companies do not provide us with the necessary mental health care to keep us safe. As a result, many of us live and work with post-traumatic stress disorder (PTSD). We weren’t warned about the horrors of the work before we started.”

When discussing “AI” with students and teachers, please recognize these exploited workers. “AI” cannot “carry out complex tasks with little or no human intervention.”

Attribute Agency To The Humans.

The fourth commitment of The Baldwin Test is “Attribute agency to the human actors building and using the technology, never to the technology itself.”

The best example of this is the problematic term, “Hallucination.” It sounds like the LLM went off on its own and did something wrong.

The problem, as Tim Allen, a principal engineer at Wharton Research Data Services, said:

"A lot of AI folks like to say models "hallucinate." This removes responsibility for algorithm coders and model trainers. These models do not hallucinate. They make stuff up because a programmer using a random generation function decided they should.” - Tim Allen, Wharton Research Data Services

Always attribute agency to humans, not computers.
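
One way to picture Allen's point in code: given the same scores for possible next strings, a person decides whether the program always picks the highest-scoring option or picks with a random number generator. The sketch below is hypothetical. The probabilities, the `pick_next` function, and the `greedy` switch are invented for illustration and do not come from any real LLM.

```python
import random

# Hypothetical scores for what might follow "The capital of France is".
# These numbers are invented for illustration, not taken from a real model.
next_string_probabilities = {" Paris": 0.90, " Lyon": 0.07, " Mars": 0.03}


def pick_next(probabilities, rng, greedy=False):
    """Pick the next string. A person chooses `greedy` and supplies `rng`."""
    if greedy:
        # Always return the highest-scoring option.
        return max(probabilities, key=probabilities.get)
    # Otherwise draw at random, weighted by score, so low-scoring
    # options are sometimes generated.
    options = list(probabilities)
    weights = list(probabilities.values())
    return rng.choices(options, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable

greedy_pick = pick_next(next_string_probabilities, rng, greedy=True)
print(repr(greedy_pick))  # prints: ' Paris'

# With random sampling, lower-scoring strings can also be generated.
samples = [pick_next(next_string_probabilities, rng) for _ in range(1000)]
print({option: samples.count(option) for option in next_string_probabilities})
```

If the program generates “ Mars,” no machine went rogue. People chose to sample at random, and people chose how the scores were produced.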

Continuing The Conversation

What do you think? What suggestions do you have for being precise about “AI”? Comment below or Tweet me at @TomEMullaney.

Does your school or conference need a tech-forward educator who critically evaluates AI? Reach out on Twitter or email mistermullaney@gmail.com.

Blog Post Image: The blog post image is a mashup of two images. The background is Photo by Skitterphoto from Pexels. The robot is Thinking Robot by iLexx from Getty Images.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post from the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.

1. From Hillsborough, NC where I lived for a minute.

2. This is an affiliate link. I receive a portion of any sales generated with this link.

3. Bold formatting was added by me.

4. This is meant as a critique of an idea, not a person. I will keep the presenter’s name to myself.